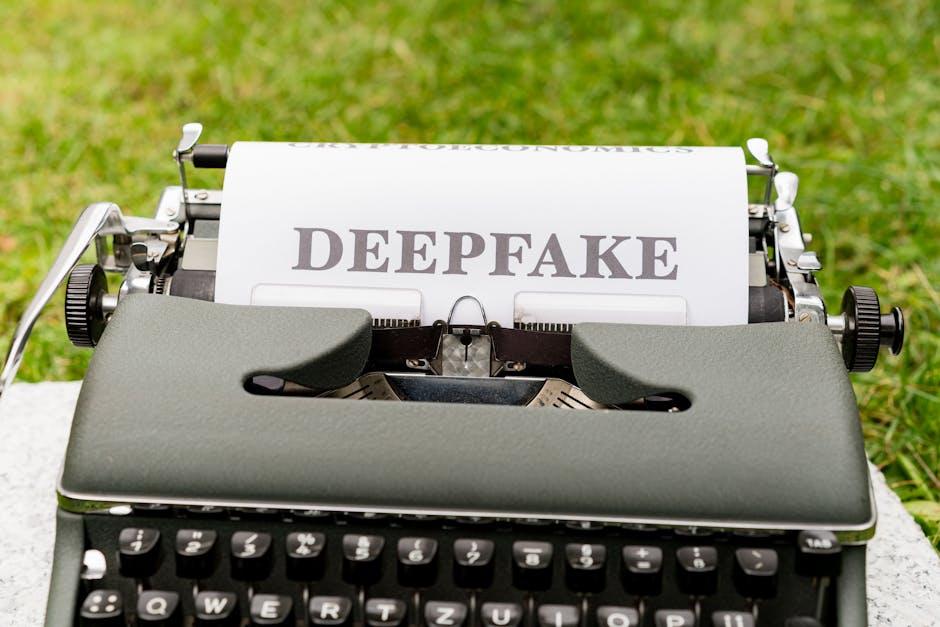

In an age where the line between reality and fabrication blurs with every click, deepfake technology stands both as a marvel and a menace. The rise of hyper-realistic synthetic media has shattered traditional notions of trust in digital content, ushering in a new era of challenges and opportunities. At the forefront of this battleground are deepfake detection tools: digital sentinels striving to unmask deception hidden beneath pixel-perfect veneers. Yet, as these tools evolve, they bring forth complex ethical questions that ripple far beyond mere technical accuracy. How do we balance the protection of truth with the rights to privacy, consent, and innovation? This article journeys into the nuanced ethics of deepfake detection, exploring the promises, perils, and moral quandaries that underpin our quest to safeguard reality in an increasingly artificial world.

Understanding the Moral Landscape of Deepfake Detection

The rise of deepfake detection tools places society at a complex ethical crossroads, where the desire to protect truth clashes with the potential for misuse of these technologies. At their core, these tools serve as guardians of authenticity, striving to uphold trust in media that shapes public opinion and personal reputations. However, the deployment of these tools must navigate ethical concerns such as privacy infringement, the risk of false positives, and the potential to marginalize creators who engage in legitimate digital manipulation for satire, education, or art. Balancing these concerns requires transparent algorithms, clear consent protocols, and accountability to ensure that the quest for truth does not inadvertently become a tool for censorship or discrimination.

When evaluating the moral impact of deepfake detection, several key factors emerge:

- Transparency: Users must understand how detection algorithms work and their limitations to avoid blind trust in automated judgments.

- Fairness: Ensuring detection tools do not disproportionately target or misclassify specific demographic groups.

- Privacy: Respecting the rights of individuals whose images or voices are analyzed during detection processes.

- Accountability: Providing clear avenues for contesting false detections and improving system accuracy.

| Ethical Consideration | Potential Risk | Mitigation Strategy |
|---|---|---|
| False Positives | Wrongful labeling of authentic content | Continuous algorithm training and human review |
| Privacy Violation | Unauthorized surveillance or data use | Strict data governance and user consent |
| Bias | Unequal detection accuracy across groups | Diverse training data and fairness audits |
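
To make the idea of a fairness audit more concrete, here is a minimal sketch in Python. It assumes a labeled evaluation set in which each record carries a demographic group, a ground-truth label, and the detector's decision; the record layout and the function names are hypothetical and not taken from any particular detection tool. The sketch compares how often authentic content is wrongly flagged in each group.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """Return {group: false positive rate}, computed over authentic items only.

    Each record is a (group, is_fake, flagged) tuple. This layout is an
    illustrative assumption, not the format of any real tool.
    """
    authentic = defaultdict(int)        # authentic items seen per group
    wrongly_flagged = defaultdict(int)  # authentic items flagged as fake
    for group, is_fake, flagged in records:
        if is_fake:
            continue  # false positives are defined on authentic content only
        authentic[group] += 1
        if flagged:
            wrongly_flagged[group] += 1
    return {g: wrongly_flagged[g] / n for g, n in authentic.items()}


def max_fpr_gap(rates):
    """Largest difference in false positive rate between any two groups."""
    values = list(rates.values())
    return max(values) - min(values) if values else 0.0
```

A large gap between groups would be one signal that the detector misclassifies some people's authentic content more often than others'. What counts as an acceptable gap is a policy decision that the code cannot make.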

Balancing Privacy Concerns with Technological Innovation

As technology accelerates, the tension between protecting individual privacy and pushing innovation forward becomes more pronounced. Deepfake detection tools, while instrumental in combating misinformation, must navigate the delicate balance of surveilling digital content without infringing on personal freedoms. These systems often require access to vast amounts of sensitive data, raising questions about data storage, user consent, and the potential misuse of biometric information. Ensuring transparency about how data is collected and analyzed is crucial to maintaining public trust. Moreover, developers must consider implementing privacy-preserving techniques, such as differential privacy or federated learning, to innovate responsibly while minimizing exposure of individual data.

In the quest to safeguard society from the dangers of manipulated media, technology must not lose sight of ethical implications. Stakeholders, including governments, tech companies, and civil society, should collaborate to establish clear guidelines that uphold both security and civil liberties. Consider the following key principles that can help harmonize privacy with innovation:

- Data Minimization: Collect only the data necessary for detection purposes and avoid excess retention.

- Accountability: Implement regular audits and transparent reporting on detection tool usage.

- User Empowerment: Provide individuals with the option to control their data and receive notifications on its use.

- Ethical Oversight: Engage multidisciplinary ethics boards to review the impact and fairness of detection algorithms.

| Challenge | Privacy Impact | Innovative Solution |
|---|---|---|
| Mass Data Collection | Risk of identity exposure | Federated learning |
| Real-Time Analysis | Continuous surveillance concerns | Edge computing |
| Algorithmic Bias | Unfair targeting of groups | Inclusive datasets |
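
Differential privacy, mentioned earlier in this section, can be illustrated with a small sketch. The Python example below assumes a platform wants to publish how many uploads were flagged without revealing whether any one person's upload is in the tally. It applies the standard Laplace mechanism to a counting query; the function names and the choice of epsilon are illustrative assumptions, not a recommendation for production use.

```python
import random


def laplace_noise(scale, rng=random):
    """Draw one sample from a Laplace distribution centered at zero.

    The difference of two independent exponential draws with rate 1/scale
    is Laplace-distributed with the given scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count, epsilon, rng=random):
    """Release a count with epsilon-differential privacy (Laplace mechanism).

    Adding or removing one person changes a count by at most 1, so the
    sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(1.0 / epsilon, rng)
```

Smaller values of epsilon add more noise and give stronger privacy, at the cost of a less accurate published figure. That trade-off is the same tension between privacy and usefulness that this section describes.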

Evaluating the Impact of Detection Tools on Free Expression

Detection tools, designed to combat misleading content, carry significant implications for the realm of free expression. On one hand, these technologies empower platforms and users to quickly identify and mitigate the spread of manipulated media, helping maintain trust and authenticity in digital conversations. Yet the very act of flagging or removing content, even when automated, can inadvertently suppress legitimate forms of artistic expression, satire, or political dissent. This delicate balance creates a battleground where the pursuit of truth must coexist with the preservation of open dialogue.

Moreover, the deployment of detection tools risks encouraging a form of surveillance culture, where creators may self-censor to avoid wrongful accusations or algorithmic biases. Key concerns include:

- False positives: Genuine creations misinterpreted as deepfakes.

- Chilling effect: Reduced willingness to experiment or critique.

- Transparency challenges: Limited clarity on detection criteria.

Consider the following comparison of outcomes when detection tools are implemented rigidly versus with nuanced guidelines:

| Approach | Potential Impact | Effect on Free Expression |
|---|---|---|
| Rigid Enforcement | High accuracy but harsh content removal | Significant suppression, risk of censorship |
| Contextual Nuance | Moderate accuracy with case reviews | Balanced protection with creative freedom |
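
One way to picture the contextual approach is a triage rule that sends uncertain or context-dependent cases to a human reviewer instead of acting on the detector's score alone. The Python sketch below is purely illustrative: the thresholds, the `declared_satire` flag, and the action names are assumptions made for this example, not settings from any real platform.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    LABEL = "label"


def triage(score, declared_satire=False,
           review_threshold=0.5, label_threshold=0.9):
    """Map a detector score in [0, 1] to an action.

    Thresholds are hypothetical. Content declared as satire is never
    labeled automatically; it is routed to a human reviewer instead.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score < review_threshold:
        return Action.ALLOW
    if score >= label_threshold and not declared_satire:
        return Action.LABEL
    return Action.HUMAN_REVIEW
```

For example, `triage(0.95)` returns `Action.LABEL`, while `triage(0.95, declared_satire=True)` returns `Action.HUMAN_REVIEW`. A rigid policy would collapse the middle band and act on every score above a single cutoff, which is where wrongful removals of satire or art become more likely.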

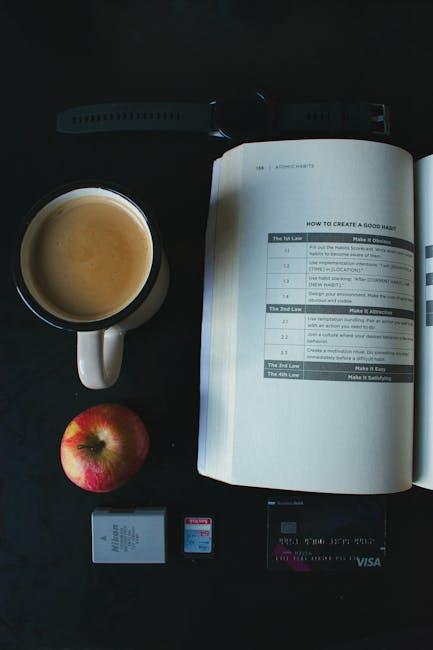

Guidelines for Ethical Deployment and Responsible Use

Deploying deepfake detection tools carries immense responsibility, demanding a commitment to transparency and respect for privacy. Organizations and developers must ensure these technologies are used to empower individuals and safeguard truth without infringing on personal rights or enabling surveillance overreach. Guidelines should emphasize informed consent, clear communication about data usage, and robust safeguards against misuse. Equally important is ongoing collaboration with ethicists, legal experts, and affected communities to adapt standards as technology evolves.

To foster trust and accountability, consider adhering to these core principles:

- Fairness: Avoid biases that may lead to disproportionate impacts on specific groups.

- Accuracy: Prioritize precision to minimize false positives and negatives.

- Transparency: Clearly disclose how detection decisions are made and what limitations exist.

- Accountability: Implement mechanisms for redress and independent audits of tool effectiveness.

| Ethical Principle | Practical Action |
|---|---|
| Privacy Protection | Encrypt data and use anonymization |
| Bias Mitigation | Train on diverse datasets |
| Transparency | Publish algorithm details |
| User Consent | Clear opt-in mechanisms |

In Conclusion

As the lines between reality and fabrication blur ever further, the tools we craft to detect deepfakes become guardians of truth in a digital age fraught with uncertainty. Yet in wielding these technologies, we must tread carefully, balancing the imperative to expose falsehoods with respect for privacy, consent, and the complexities of human expression. The ethics of deepfake detection are not merely about machines catching fakes; they are about steering the future of authenticity itself. In this uncertain terrain, our vigilance, discernment, and values will guide how technology shapes the stories we trust.